Real-Time Rendering Optimization

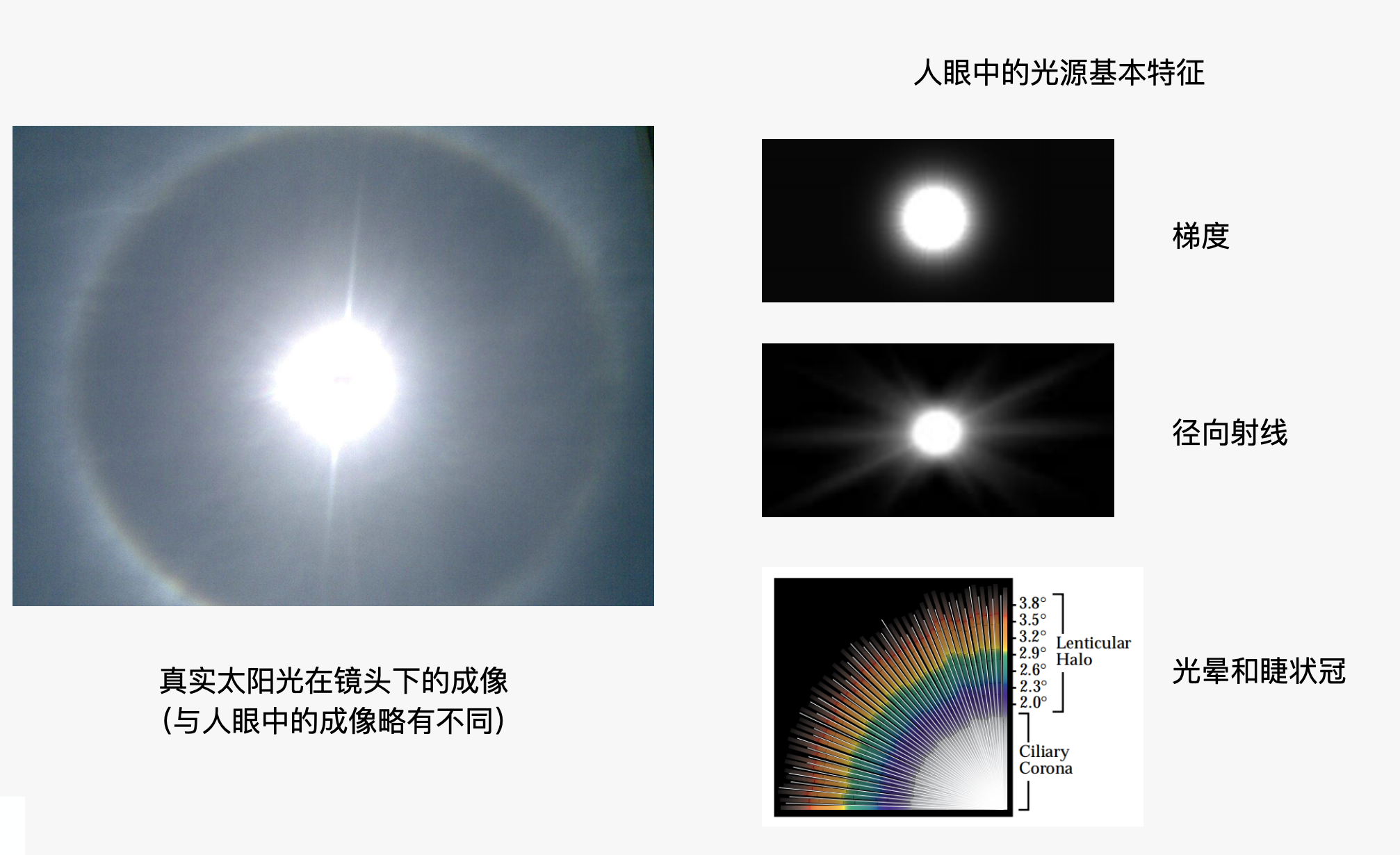

On the rendering side, we analyzed the visual characteristics of real light sources and propose a post-processing-based method for rendering self-emitting objects. The method avoids the costly computation of indirect light and shadow, and it produces more realistic visual results.
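The text does not spell out the post-processing steps, so the following is only a minimal sketch of one common way to fake emission in screen space: isolate the emissive pixels, blur them into a halo, and add the halo back onto the frame. The function names (`box_blur`, `add_glow`), the grayscale list-of-lists image format, and the `radius` and `strength` parameters are all assumptions made for illustration, not the method used in this work.

```python
from typing import List

Image = List[List[float]]  # grayscale image, values in [0, 1]


def box_blur(img: Image, radius: int) -> Image:
    """Average each pixel with its neighbours that lie inside the image."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for yy in range(max(0, y - radius), min(h, y + radius + 1)):
                for xx in range(max(0, x - radius), min(w, x + radius + 1)):
                    total += img[yy][xx]
                    count += 1
            out[y][x] = total / count
    return out


def add_glow(scene: Image, emissive_mask: Image,
             radius: int = 1, strength: float = 1.0) -> Image:
    """Composite a blurred copy of the emissive pixels on top of the scene.

    `emissive_mask` is a hypothetical per-pixel mask (1.0 where a
    self-emitting object is visible, 0.0 elsewhere).
    """
    h, w = len(scene), len(scene[0])
    # Keep only the pixels that belong to self-emitting objects.
    emissive = [[scene[y][x] * emissive_mask[y][x] for x in range(w)]
                for y in range(h)]
    halo = box_blur(emissive, radius)
    # Additive blend, clamped to the displayable range.
    return [[min(1.0, scene[y][x] + strength * halo[y][x]) for x in range(w)]
            for y in range(h)]
```

Because the halo is computed from the finished frame, no indirect lighting pass is involved; the cost depends only on the image size and the blur radius.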

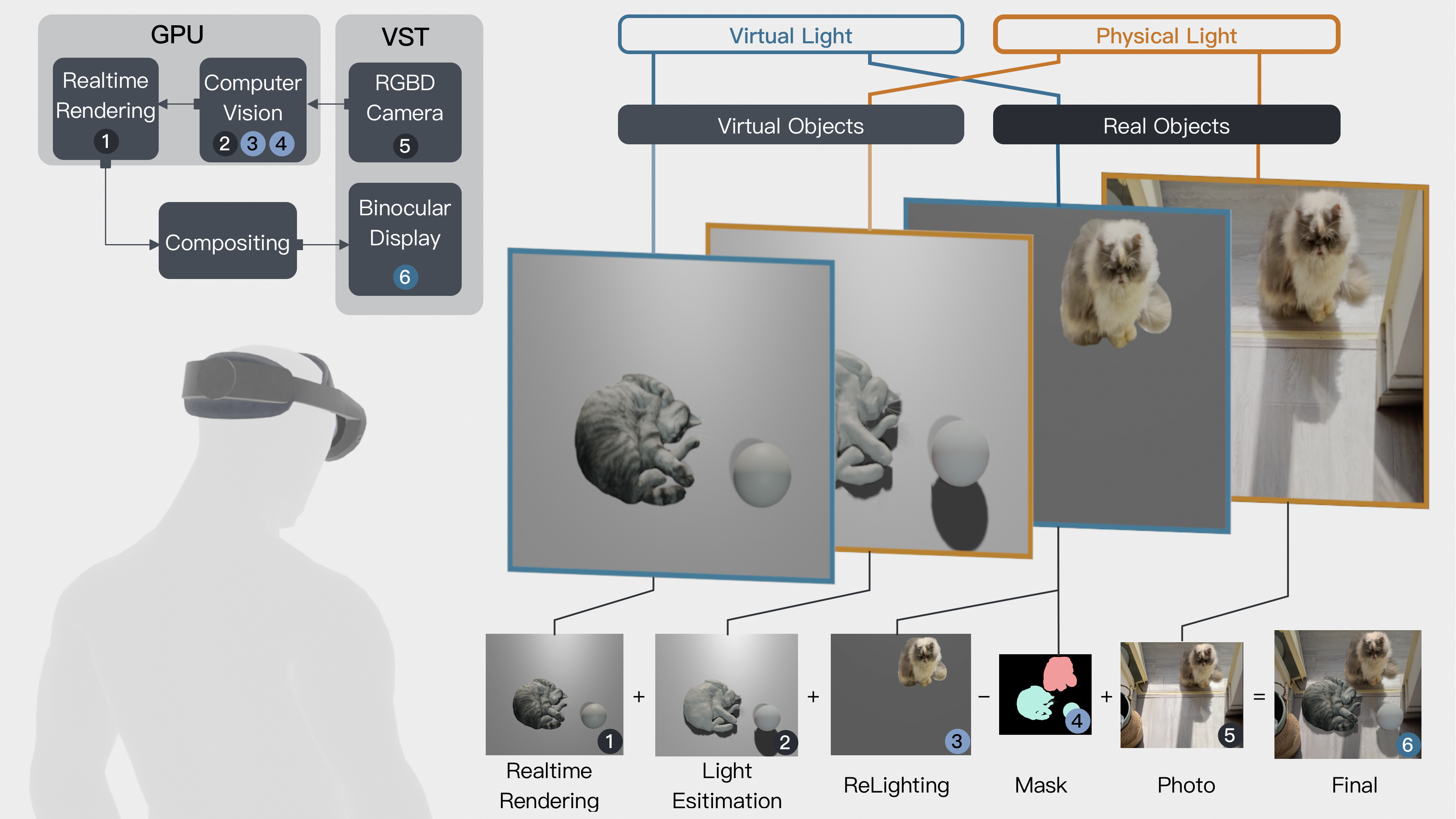

In parallel, we built a controllable lighting test environment using a smart lighting system (Yeelight). Through systematic parameter-matching tests, we established an accurate mapping between physical light parameters and rendering parameters, which provides technical support for lighting consistency in mixed reality environments.
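One plausible way to represent such a mapping is a lookup table of matched pairs with linear interpolation between them. The sketch below assumes this form. The calibration values, the choice of lamp brightness in percent as the physical parameter, and the names `make_mapping` and `brightness_to_intensity` are hypothetical; the actual parameters and measured values are not given in the text.

```python
from bisect import bisect_right
from typing import Callable, List, Tuple


def make_mapping(samples: List[Tuple[float, float]]) -> Callable[[float], float]:
    """Build a piecewise-linear map from (physical, rendered) calibration pairs."""
    pts = sorted(samples)
    xs = [p[0] for p in pts]
    ys = [p[1] for p in pts]

    def to_render(physical: float) -> float:
        # Clamp outside the measured range instead of extrapolating.
        if physical <= xs[0]:
            return ys[0]
        if physical >= xs[-1]:
            return ys[-1]
        # xs[i - 1] <= physical < xs[i], so the denominator is positive.
        i = bisect_right(xs, physical)
        t = (physical - xs[i - 1]) / (xs[i] - xs[i - 1])
        return ys[i - 1] + t * (ys[i] - ys[i - 1])

    return to_render


# Hypothetical calibration pairs: lamp brightness (%) -> emissive intensity
# in the renderer. Real values would come from the parameter-matching tests.
CALIBRATION = [(1, 0.05), (25, 0.6), (50, 1.5), (75, 2.8), (100, 4.5)]
brightness_to_intensity = make_mapping(CALIBRATION)
```

Calling `brightness_to_intensity(37.5)` interpolates halfway between the 25% and 50% samples. Clamping at the ends keeps the renderer within the range that was actually measured.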